In the Fourier transform, any signal can be represented as a sum of sinusoids (the basis functions) multiplied by coefficients. Principal component analysis (PCA) uses a similar idea: a signal is represented by a set of basis functions and the proper coefficients. Here we apply it to images.

First I choose an image:

and convert it to grayscale:

Then we cut the image into 10 x 10 blocks and reshape each block into a 1-D array. Doing this for the whole image gives an n x p matrix, where n is the number of 10 x 10 blocks and p is the number of elements per block (100 in this case). Next we apply PCA using the "pca" function in Scilab.
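The block-cutting step can be sketched in NumPy as follows. This is not the Scilab code used for the post; the helper names `image_to_blocks` and `blocks_to_image` are hypothetical, and cropping edges that do not fill a whole block is an assumption.

```python
import numpy as np

def image_to_blocks(gray, b=10):
    """Cut a 2-D grayscale image into non-overlapping b x b blocks and
    flatten each block into one row, giving an n x p matrix (p = b*b).
    Assumption: rows/columns that don't fill a whole block are cropped."""
    h, w = gray.shape
    h, w = h - h % b, w - w % b                # crop to a multiple of b
    g = gray[:h, :w]
    # (h/b, b, w/b, b) -> (h/b, w/b, b, b) -> (n, b*b), blocks in row-major order
    blocks = g.reshape(h // b, b, w // b, b).swapaxes(1, 2)
    return blocks.reshape(-1, b * b), (h, w)

def blocks_to_image(X, shape, b=10):
    """Inverse of image_to_blocks: put each row back in its b x b position."""
    h, w = shape
    return X.reshape(h // b, w // b, b, b).swapaxes(1, 2).reshape(h, w)
```

For a 30 x 40 image this yields a 12 x 100 matrix: twelve 10 x 10 blocks, each flattened into 100 values.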

Correlation circle:

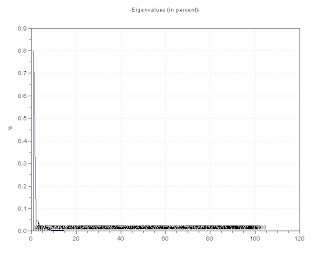

The "pca" function gives three outputs: the eigenvalues, the eigenvectors, and the coefficients. The image can be reconstructed from these, and the quality of the reconstruction depends on how many eigenvectors are used (i.e., the amount of compression).
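To make the three outputs and the reconstruction concrete, here is a minimal NumPy sketch. It assumes PCA on the covariance of the mean-centred block matrix; Scilab's "pca" may normalize the data differently, so its eigenvalues need not match these exactly. All function names are hypothetical.

```python
import numpy as np

def pca_fit(X):
    """PCA of an n x p data matrix via eigendecomposition of its covariance.

    Returns eigenvalues (largest first), eigenvectors (one per column),
    coefficients (n x p projections) and the per-column mean.
    """
    mean = X.mean(axis=0)
    Xc = X - mean                             # centre each pixel position
    cov = Xc.T @ Xc / (X.shape[0] - 1)        # p x p covariance matrix
    evals, evecs = np.linalg.eigh(cov)        # eigh returns ascending order
    order = np.argsort(evals)[::-1]           # reorder largest first
    evals, evecs = evals[order], evecs[:, order]
    coeffs = Xc @ evecs                       # weight of each eigenvector in each block
    return evals, evecs, coeffs, mean

def pca_reconstruct(evecs, coeffs, mean, k):
    """Approximate every block using only the first k eigenvectors."""
    return coeffs[:, :k] @ evecs[:, :k].T + mean

def cumulative_eigen_percent(evals):
    """Running share (%) of the eigenvalue total kept by the first 1..p eigenvectors."""
    return 100.0 * np.cumsum(evals) / np.sum(evals)
```

With `X` as the n x 100 block matrix, `pca_reconstruct(evecs, coeffs, mean, 2)` plays the role of the 2-eigenvector reconstruction, and `cumulative_eigen_percent(evals)[1]` the role of the cumulative eigenvalue percentage quoted with it. Using all 100 eigenvectors returns `X` exactly, because the eigenvectors form a complete orthonormal basis.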

And the reconstructions are:

Using 2 out of 100 eigenvectors (the first 2 eigenvalues account for 85.5% of the eigenvalue sum). Here the faces of the people are unrecognizable. (1.36 MB)


Using 4 out of 100 eigenvectors (the first 4 eigenvalues account for 91% of the eigenvalue sum). Some faces begin to be a little recognizable. (1.44 MB)

We can see that reconstructing with only a few basis vectors gives a very poor, lossy image. The images using only 1, 2 and 4 eigenvectors look blocky, and high-frequency features such as the faces are missing or blurred. As we increase the number of eigenvectors, the quality improves and the finer features show up. At 10 eigenvectors the faces are quite recognizable and the image begins to look "ok". At 48 out of 100 eigenvectors the reconstruction is decent and the losses are not noticeable. Using all the eigenvectors, we recover the grayscale image. I should also mention that when I used imwrite to save the images, the file size increased as I used more eigenvectors, which is intuitive; however, the file sizes were even bigger than that of the original image. When all the eigenvectors are used, the file size is the same as that of the original image.
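One way to read the file-size observation: a file written with imwrite presumably still stores every pixel of the reconstructed image, so the number of eigenvectors changes the pixel values but not how many pixels are saved. The saving PCA promises comes from storing the coefficients and eigenvectors instead of the pixels. The sketch below only counts stored numbers; it ignores bit depth and any compression a file format applies, and `pca_storage_counts` is a hypothetical helper.

```python
def pca_storage_counts(n_blocks, p, k):
    """Count the numbers needed to store an image as raw blocks vs. as a
    k-component block-PCA representation (coefficients + eigenvectors + mean).
    Ignores bit depth and any entropy coding a real file format would apply."""
    raw = n_blocks * p                 # every pixel of every block
    pca = n_blocks * k + p * k + p     # n x k coeffs, p x k eigenvectors, p means
    return raw, pca
```

For a hypothetical 600 x 800 image (4800 blocks of 100 pixels), `pca_storage_counts(4800, 100, 10)` gives `(480000, 49100)`, about a tenth of the raw count, while `k = 100` gives `(480000, 490100)`: keeping every eigenvector stores slightly more than the raw pixels.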

What we did here was apply PCA to a grayscale image. True-color images consist of 3 planes (RGB), so we also tried applying PCA to each plane individually and then combining the three reconstructed planes at the end:

Using all the eigenvectors for each plane (RGB).

As fewer eigenvectors are used, the image blurs and the colors fade. However, there is a slight difference between the blue of the jacket in the reconstruction using all eigenvectors and in the original image... maybe because of the scaling when using imwrite?
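The per-plane procedure can be sketched as below. This is a NumPy stand-in, not the Scilab code from the post: it uses the SVD of the centred block matrix in place of an explicit covariance eigendecomposition (the two give the same basis up to sign), and it assumes float pixels in [0, 1] and image sides that are multiples of the block size. The final step clips out-of-range values; if a save step instead stretched each channel to fill the full range, hues could shift slightly, which is one possible (unverified) cause of the jacket color difference. Function names are hypothetical.

```python
import numpy as np

def pca_compress_plane(plane, k, b=10):
    """Block-PCA reconstruction of one 2-D plane from its first k components.
    Assumes the plane's height and width are multiples of b."""
    h, w = plane.shape
    # n x (b*b) matrix: one flattened b x b block per row
    X = plane.reshape(h // b, b, w // b, b).swapaxes(1, 2).reshape(-1, b * b)
    mean = X.mean(axis=0)
    # Rows of Vt are the principal directions (covariance eigenvectors),
    # ordered by decreasing singular value, i.e. decreasing eigenvalue.
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    V = Vt[:k].T                                  # keep the first k directions
    Xr = (X - mean) @ V @ V.T + mean              # project and rebuild
    return Xr.reshape(h // b, w // b, b, b).swapaxes(1, 2).reshape(h, w)

def pca_compress_rgb(img, k, b=10):
    """Run block PCA on R, G and B separately, then stack the planes and
    clip to [0, 1] (values are assumed to be floats in that range)."""
    planes = [pca_compress_plane(img[..., c], k, b) for c in range(3)]
    return np.clip(np.stack(planes, axis=-1), 0.0, 1.0)
```

Processing the planes independently means each channel gets its own eigenvectors, so with few components each channel loses detail separately.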

Score: 10/10

Lastly I would like to acknowledge Dr Soriano, Arvin Mabilangan, Joseph Bunao, Andy Polinar, Brian Aganggan and BA Racoma for the helpful and insightful discussions. :D

- Dennis

